MNIST Muddle

- Divakar V

- May 9, 2021

- 2 min read

Creating poorly written numeric digits using AutoEncoders and MNIST dataset.

Demo website - LINK

GitHub link - LINK

Contents:

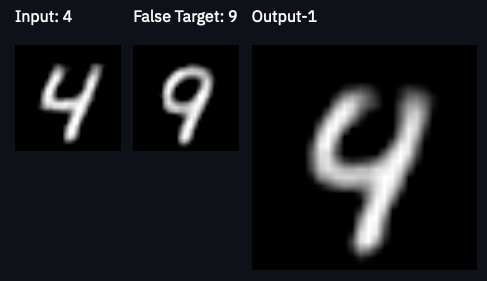

Project aim: Pick an input image of a given digit from MNIST dataset and transform it in a way that it looks like another digit. An output image will be considered good if:

Difficult to tell what digit it is.

Still looks like a digit.

Hard to choose a category between 2 labels.

Example of good output: Transforming '4' to look like something between '4' & '9'

Project Motivation: I did this dummy project to get a practical exposure to:

Auto Encoders

Latent domain

Hosting apps using streamlit

PyTorch hands-on

For the love of images!

Details and Approach:

The project is based upon MNIST dataset - a standard ML dataset containing handwritten grayscale images of size 28x28 pixels.

Step-1: Train an AutoEncoder model

A Convolutional Auto-Encoder was used for the purpose. Details:

Encoder - 4 convolutional blocks followed by a dense layer.

Latent vector dimension - Vector of size 10

Decoder - A dense layer followed by 4 De-Convolutional blocks

Checkout the github repo for training details of AutoEncoder.

Step-2: Use the learnt latent vector representation from above

Pre-computation:

Compute average latent vector for all the 10 labels (0-9). It is used for finding which of these clusters (labels) is closest to the input image's latent vector. Result of this step is a (10x10) matrix. As mentioned above, size of latent vector is 10.

Steps to generate output image:

1. Next, we pick a random image 'a' from the data corresponding to the selected label [0-9] and compute its latent vector 'l1'.

2. We then find the nearest cluster to l1 in the latent domain using the precomputed matrix.

3. We randomly pick another image 'b' from the nearest cluster above and generate it's latent vector 'l2'.

4. The resultant latent vector 'l_avg' is the average of 'l1' and 'l2'. Note - There is a big assumption here! (described below).

5. Generate (using model's decoder) the output image from the latent vector 'l_avg' computed above.

Assumptions

Clusters are distinct and mutually exclusive. (Not always the case)

Nearest clusters in latent representation are similar looking in image domain. (Not always true)

No False positive images in the training set. (Not true. Dataset is dirty)

Taking average of 2 latent vectors present in 2 different clusters will result in a latent vector which lies in the empty space between those clusters. Hoping that image generated from this latent vector looks somewhere between those 2 digits.

Further thoughts:

Instead of picking the middle (avg) latent vector of l1 & l1, generate a series of outputs. Then pick the output for which mse(Oi, img_a) + mse(Oi, img_b) is maximum. In other words, it is far from both img_a & img_b

Comments